Architecture Drift in GitHub PRs — Detect Structural Pattern Breaks Linters Miss

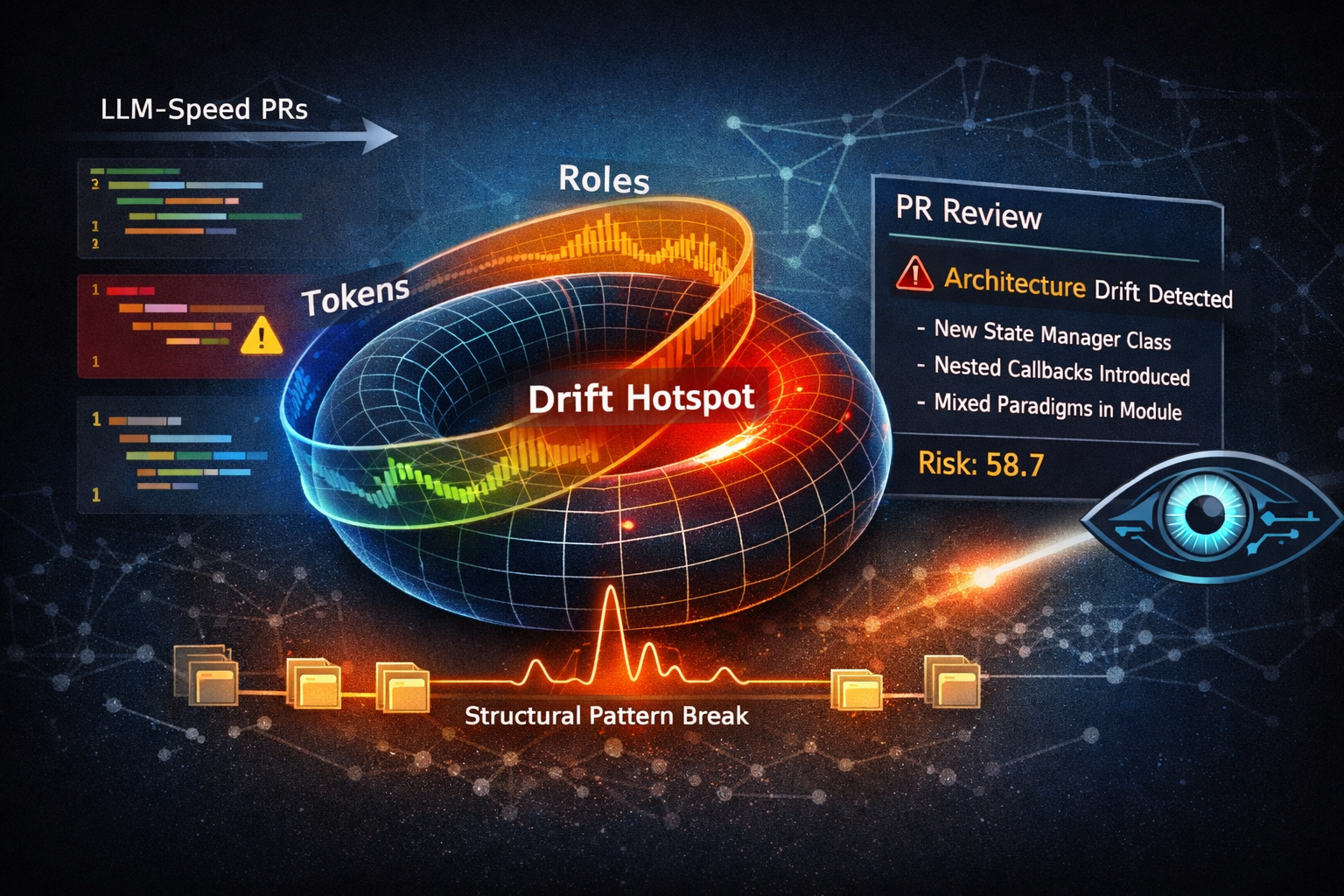

Catch architecture drift in pull requests. A repo-specific baseline highlights structural pattern breaks (state, control flow, abstractions) and hotspots in PR comments, built for modern LLM-speed code changes.

Architecture Drift Radar in Every Pull Request

LLMs increased PR throughput. Architecture review didn’t scale.

Revieko flags structural pattern breaks in PRs — the kinds of changes that pass linters and tests, look “clean”, but quietly push a codebase away from its established architecture and team conventions.

What you get in PR comments:

- PR risk score + per-file risk

- Hotspots (line-level) where structure deviates most

- A repo-specific baseline learned from your own history — not generic “best practices”

- Low-noise signals designed for real code review

Install: https://github.com/apps/revieko-architecture-drift-radar

Demo: https://github.com/synqratech/revieko-demo-python

Pilot: https://synqra.tech/revieko

The problem: PR speed grew; architecture review stayed local

Modern teams ship faster because code is cheaper to produce:

- LLM-assisted implementation

- more parallel changes

- larger diffs and shorter review windows

But architecture doesn’t usually fail on syntax. It fails when PRs introduce new structural habits that the repo never had — and the team doesn’t notice until maintainability and change-cost spike.

You can ship code that:

- follows style rules,

- compiles,

- passes tests,

- even gets “LGTM” from an AI reviewer…

…and still moves the codebase toward:

- higher coupling,

- more statefulness,

- deeper, more nested control flow,

- ad-hoc recovery/strategy logic,

- mixed paradigms (functional ↔ OOP),

- inconsistent module boundaries.

That’s architecture drift: not “wrong code” — off-pattern structure.

What “architecture drift” means to engineers

In practical engineering terms, drift shows up as structural pattern breaks such as:

1) New stateful abstractions inside previously simple flows

Examples:

- introducing a private state manager class

- adding caching/history/hidden state to a component that used to be stateless

Why it matters:

- changes invariants

- increases implicit dependencies

- makes behavior harder to reason about during review
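A small sketch of this kind of break, with hypothetical names (`price_with_tax`, `_RateCache`) invented purely for illustration: the first function is stateless, while the second hides a private state manager behind the same-looking call. Both would pass a linter and a simple test.

```python
def price_with_tax(amount, rate):
    """Stateless: the result depends only on the arguments."""
    return round(amount * (1 + rate), 2)


class _RateCache:
    """Hypothetical private state manager introduced by a PR."""

    def __init__(self):
        self._last_rate = None

    def resolve(self, rate):
        # Remember the most recent explicit rate and reuse it later.
        if rate is not None:
            self._last_rate = rate
        return self._last_rate


_cache = _RateCache()


def price_with_tax_drifted(amount, rate=None):
    """Stateful: the result now also depends on earlier calls."""
    return round(amount * (1 + _cache.resolve(rate)), 2)
```

The drifted version returns the same numbers when a rate is passed, but a call without a rate silently depends on call history, which is exactly the implicit dependency a reviewer has to notice.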

2) Control-flow shape changes

Examples:

- adding nested callbacks, closures, or nested functions

- retry loops + recovery layers

- branching structures that reshape the function’s “shape” without changing outputs

Why it matters:

- raises cognitive complexity

- makes bugs more likely in edge cases

- inflates review time disproportionately
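One rough way to make "shape" concrete is to measure nesting depth with Python's standard `ast` module. The sketch below is an illustration only, not Revieko's method: the two sample functions and the choice of which node types count as nesting are assumptions. Both versions return the same outputs, but their shapes differ.

```python
import ast

# Two hypothetical versions of the same function with identical outputs.
FLAT = '''
def first_negative(xs):
    for x in xs:
        if x < 0:
            return x
    return None
'''

NESTED = '''
def first_negative(xs):
    def scan(items):
        for x in items:
            try:
                if x < 0:
                    return x
            except TypeError:
                continue
        return None
    return scan(xs)
'''

# Assumption: these node types are the ones that add a nesting level.
NESTING_NODES = (ast.FunctionDef, ast.For, ast.While, ast.If,
                 ast.Try, ast.With, ast.Lambda)


def max_nesting(source: str) -> int:
    """Return the deepest chain of nesting constructs in the source."""
    def walk(node, depth):
        if isinstance(node, NESTING_NODES):
            depth += 1
        children = [walk(child, depth) for child in ast.iter_child_nodes(node)]
        return max([depth] + children)

    return walk(ast.parse(source), 0)


print(max_nesting(FLAT), max_nesting(NESTED))
```

A single number like this is far cruder than a learned baseline, but it shows how a change can leave behavior untouched while the structure a reviewer must hold in mind grows.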

3) Paradigm shifts inside a file/module

Examples:

- introducing generator pipelines (yield, filtered generators)

- higher-order functions and lambdas inside loops

- “Strategy pattern” style recovery lists instead of direct logic

Why it matters:

- the module becomes harder to maintain by the rest of the team

- creates local “mini-frameworks” that don’t match the repo’s conventions
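As a toy illustration of a "this construct never appeared here before" check, the sketch below uses Python's standard `ast` module to list paradigm-level constructs that show up in a changed file but nowhere in a set of baseline sources. The label set and the sample sources are assumptions made for the example; this is not how Revieko is implemented.

```python
import ast
from collections import Counter

# Assumption: a small, hand-picked set of constructs that signal a paradigm.
PARADIGM_NODES = {
    ast.Yield: "yield",
    ast.YieldFrom: "yield",
    ast.Lambda: "lambda",
    ast.GeneratorExp: "genexp",
    ast.ClassDef: "class",
}


def paradigm_profile(source: str) -> Counter:
    """Count occurrences of each labeled construct in the source."""
    counts = Counter()
    for node in ast.walk(ast.parse(source)):
        label = PARADIGM_NODES.get(type(node))
        if label is not None:
            counts[label] += 1
    return counts


def new_constructs(baseline_sources, changed_source):
    """Return labels used in the changed source but never in the baseline."""
    seen = Counter()
    for source in baseline_sources:
        seen += paradigm_profile(source)
    return sorted(k for k in paradigm_profile(changed_source) if k not in seen)


# Hypothetical baseline: plain imperative code.
BASELINE = ["def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"]

# Hypothetical change: a generator plus a lambda in the same module.
CHANGED = (
    "def evens(xs):\n"
    "    keep = lambda v: v % 2 == 0\n"
    "    for x in xs:\n"
    "        if keep(x):\n"
    "            yield x\n"
)

print(new_constructs(BASELINE, CHANGED))
```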

4) Layering and boundary erosion

Examples:

- business logic mixing with error orchestration

- configuration/state leaking into core loops

- utility logic creeping into high-level modules

Why it matters:

- architecture becomes implicit and inconsistent

- future changes require more context and coordination

Key point: drift is often a style-of-architecture issue — the repo’s established structural habits (what the team naturally expects) get violated by a PR.

Why linters, tests, and typical AI review miss this

Linters / formatters

Great for:

- syntax, formatting, static rule checks

Weak for:

- detecting that a PR introduces a new kind of structure the repo historically doesn’t use

(e.g., callbacks + generators + strategy pattern in a codebase that never used them)

Tests

Great for:

- catching behavior regressions

Weak for:

- architecture and maintainability regressions that still “work”

- changes that increase future change-cost without breaking current correctness

Typical AI code review

Great for:

- summarizing diffs

- suggesting local improvements and style fixes

Weak for:

- repo-specific norms over time

- “this pattern never existed in this repo” signals

- global architecture drift across files/modules

(and in practice, AI reviewers still tend to be diff-local)

A concrete example engineers immediately recognize:

A senior dev introduces a “clean” design pattern (Visitor/Strategy/etc.).

Generic review says “nice”.

But the repo has had zero instances of that pattern for years.

The team later pays the maintenance tax.

Revieko is built exactly for that gap: architectural consistency checking.

The core intuition: architecture is visible through 3 channels (token / role / depth)

We model a code change not just by “what tokens changed”, but by how the change reshapes structure across three signals:

1) Token channel — the concrete symbols and identifiers used

2) Role channel — the structural role of each piece (branching, assignment, call, return, block boundary, etc.)

3) Depth channel — where it lives in the nesting/context stack

A simplified illustration:

token: if  x   <   0    :   return  False

role:  BR  ID  OP  NUM  CL  RET     LIT

depth: 1   1   1   1    1   2       2

Why these channels connect to architecture (engineer version)

Architecture is expressed as stable structural habits in a repo:

- Role captures control-flow and structural conventions (how code is “shaped”)

- Depth captures layering and complexity distribution (where complexity is allowed to live)

- Token↔Role coupling captures how semantics are used structurally

(e.g., identifiers and dependencies showing up inside new control structures)

When a PR introduces a new abstraction style, paradigm, or control-flow habit, these channels stop matching the repo’s historical regularities.

That mismatch is what Revieko detects — and turns into review hotspots.
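To make the three channels tangible, here is a minimal sketch that derives token, role, and depth rows from Python source with the standard `tokenize` module. The role labels mirror the simplified illustration above, but the mapping rules are assumptions (for example, treating `CL` as the colon that opens a block, and `KW` as a catch-all for other keywords); Revieko's actual role taxonomy is not described here.

```python
import io
import keyword
import tokenize

BRANCH_KEYWORDS = {"if", "elif", "else"}
LITERAL_KEYWORDS = {"True", "False", "None"}
SKIPPED = {tokenize.NEWLINE, tokenize.NL, tokenize.COMMENT, tokenize.ENDMARKER}


def role_of(tok) -> str:
    """Map a token to a coarse structural role (assumed labels)."""
    if tok.type == tokenize.NAME:
        if tok.string in BRANCH_KEYWORDS:
            return "BR"
        if tok.string == "return":
            return "RET"
        if tok.string in LITERAL_KEYWORDS:
            return "LIT"
        if keyword.iskeyword(tok.string):
            return "KW"
        return "ID"
    if tok.type == tokenize.NUMBER:
        return "NUM"
    if tok.type == tokenize.STRING:
        return "LIT"
    if tok.type == tokenize.OP:
        return "CL" if tok.string == ":" else "OP"
    return "OTHER"


def channels(source: str):
    """Return (token, role, depth) rows; depth follows indentation."""
    rows, depth = [], 0
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.INDENT:
            depth += 1
        elif tok.type == tokenize.DEDENT:
            depth -= 1
        elif tok.type not in SKIPPED:
            rows.append((tok.string, role_of(tok), depth))
    return rows


SOURCE = "def check(x):\n    if x < 0:\n        return False\n    return True\n"

for row in channels(SOURCE):
    print(row)
```

For the `if x < 0:` line and its body, this yields the same role and depth sequence as the illustration: the branch sits at depth 1 and the `return False` at depth 2.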

How Revieko detects drift (without drowning you in theory)

1) Build a repo-specific baseline

Revieko learns what is “normal” for your codebase from its history:

- structural habits

- typical control-flow shapes

- typical nesting patterns

- typical abstraction styles in each area

2) Compare each PR against that baseline

Revieko finds:

- structural pattern breaks (off-pattern structure)

- hotspots (the exact lines/regions driving the break)

- risk score (aggregated across files)
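The baseline-then-compare idea can be sketched with a deliberately simple stand-in: count (AST node type, depth) pairs across baseline sources, then score new code by its average surprisal under those counts. Everything here is an assumption for illustration (the features, the add-one smoothing, the sample sources); Revieko's real model and scoring are not shown in this post.

```python
import ast
import math
from collections import Counter


def features(source: str):
    """List (node type name, depth) pairs for every AST node."""
    out = []

    def walk(node, depth):
        out.append((type(node).__name__, depth))
        for child in ast.iter_child_nodes(node):
            walk(child, depth + 1)

    walk(ast.parse(source), 0)
    return out


def build_baseline(sources) -> Counter:
    """Aggregate feature counts over the repo's existing code."""
    counts = Counter()
    for source in sources:
        counts.update(features(source))
    return counts


def surprisal(baseline: Counter, source: str) -> float:
    """Average bits of surprise per feature, with add-one smoothing."""
    total = sum(baseline.values())
    vocab = len(baseline) + 1  # one extra slot for unseen features
    feats = features(source)
    bits = [-math.log2((baseline[f] + 1) / (total + vocab)) for f in feats]
    return sum(bits) / len(feats)


# Hypothetical repo history: flat, imperative helpers.
BASELINE_SOURCES = [
    "def total(xs):\n    result = 0\n    for x in xs:\n        result += x\n    return result\n",
    "def first_negative(xs):\n    for x in xs:\n        if x < 0:\n            return x\n    return None\n",
]

ON_PATTERN = (
    "def count_positive(xs):\n    n = 0\n    for x in xs:\n"
    "        if x > 0:\n            n += 1\n    return n\n"
)

OFF_PATTERN = (
    "def count_positive(xs):\n"
    "    def make_filter(pred):\n"
    "        return lambda items: (i for i in items if pred(i))\n"
    "    keep = make_filter(lambda v: v > 0)\n"
    "    return sum(1 for _ in keep(xs))\n"
)

baseline = build_baseline(BASELINE_SOURCES)
print(surprisal(baseline, ON_PATTERN) < surprisal(baseline, OFF_PATTERN))
```

Both candidate functions compute the same count, yet the closure-and-generator version scores as more surprising against this baseline, which is the kind of signal that would be surfaced as a hotspot rather than a correctness error.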

3) Output is review-native

No dashboards required to get value:

- PR comment summary

- per-file risk

- actionable hotspot list

What it looks like in a PR

You’ll see something like:

Revieko: High risk | risk=58.7 | hotspots=6

Top hotspots:

- pattern_break: new state manager / state validation introduced

- pattern_break: generator-based flow added in a module that was imperative

- pattern_break: callback/closure introduced inside a core loop

- pattern_break: strategy-style recovery logic introduced

And then line-level pointers in context, so a reviewer can ask the right question:

- “Why are we introducing this new abstraction here?”

- “Is this consistent with how this repo solves the same problem elsewhere?”

- “Are we mixing paradigms in a way that will confuse the next person?”

What Revieko is (and is not)

Revieko is:

- an architectural consistency checker

- a low-noise layer for PR review

- repo-specific, not generic “best practice” policing

Revieko is not:

- a linter replacement

- a security scanner

- a type checker

- a full architecture audit tool

It complements existing gates by adding the missing one:

architectural drift observability in PRs.

Install in ~2 minutes

1) Install the GitHub App: https://github.com/apps/revieko-architecture-drift-radar

2) Choose a repository (or start with the demo repo). Demo repository: https://github.com/synqratech/revieko-demo-python

3) Open or update a Pull Request. Revieko analyzes the PR automatically after installation.

4) See architecture risk and hotspots in PR comments. Review structural pattern breaks directly in the PR conversation.

Quick links:

- Install: https://github.com/apps/revieko-architecture-drift-radar

- Demo: https://github.com/synqratech/revieko-demo-python

- Pilot: https://synqra.tech/revieko

Research foundation (optional)

Revieko’s structural signals are grounded in PhaseBrain — a research model for measuring structural regularity in codebases.

At a practical level, PhaseBrain treats a repository as a system with stable architectural patterns expressed through three orthogonal structural channels:

- Token patterns (what kinds of constructs appear and how they repeat),

- Structural roles (control flow, declarations, abstractions),

- Nesting depth and block structure (how complexity is layered).

Instead of analyzing lines or files in isolation, the model learns repo-specific structural baselines from historical code and detects when a pull request introduces changes that break those learned regularities — even if the code is syntactically valid, well-tested, and passes conventional review.

This allows Revieko to flag architectural drift as a measurable deviation from the repository’s own structure, not as a subjective opinion or a generic rule violation.

If you want the full mechanics, assumptions, and reproducible foundations:

- PhaseBrain paper (Zenodo):

https://zenodo.org/records/17820299

This section exists for engineers who want to verify that the signals are based on a concrete measurement model, not heuristic scoring or black-box LLM judgments.

Quick FAQ

Does this only work for Python?

No. The signals are structural and exist across languages (roles, nesting, semantics-in-structure). Coverage improves as the repo baseline grows.

Will it create noise?

The baseline is repo-specific, so the goal is to flag what’s abnormal for your codebase, not what’s “bad in general”.

Does it replace code review?

No. It makes review more focused by telling you where the architecture is being reshaped — so humans spend attention where it matters.